Or, Baillargeon vs. Csibra

Here's the question: at ages when infants both have physical expectations and can use social (pedagogical) cues, is there a period when one kind of cue takes precedence over the other? And here is a way of testing it.

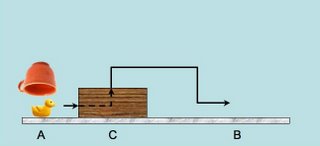

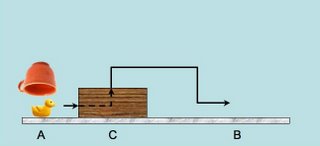

This is a task that infants can do (ref?):

Basically, there is a rubber ducky on a table that also has an occluder on it. A cup covers the ducky (at point A) and slides behind the occluder; the cup then lifts off, goes past the occluder, and comes down at point B. At this point, where should the rubber ducky be?

Babies think that the rubber ducky must lie behind the occluder at point C.

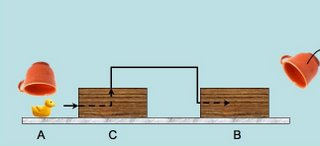

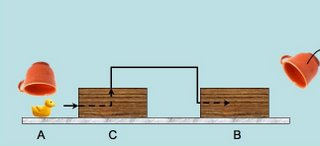

Now for the variation: first, there are two occluders on the table.

The cup comes down, slides behind occluder 1 at point C, lifts up, goes behind the other occluder at point B, and then lifts up and goes away, leaving just the two occluders. So far this was the Renee part; now for the Csibra part.

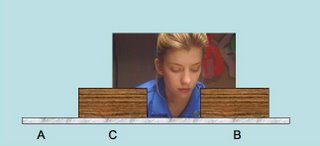

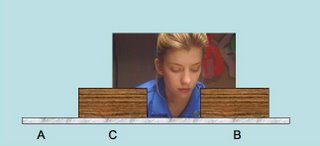

Imagine that a human observer gazes excitedly behind the occluder at position B.

(ok, so she doesn't look terribly excited.. I just got that somewhere from the Google image search)

According to the Gergo line, the infant should expect the object at position B. But according to the Renee line, the infant should expect the object at position C. What might actually happen when the occluders come down and the ducky is at position C vs. position B?

First, given the Baillargeon results and the Gergo theory, one might expect infants to show more surprise if the experimenter looks at position B than if she looks at position C. This would be the complementary experiment to the previous Gergo experiment, and would tie in physical expectation with social expectation.

So this would show not only that infants expect objects to be where people look, but also that they expect people to look where there is an object. This would be a nice validation of the pedagogical stance.

Next, one can see what happens when the occluders are dropped; there are two possibilities:

1) The object is where the experimenter was looking (position B).

2) The object is where it is supposed to be, given physical constraints (position C).

The question is: in which condition would the infant look longer? If it looks longer when the object is at position B, it would imply that the physical rules win. However, if it looks longer when the object is at C, it would mean that, given the social cue, the infant had updated its object-file representation and now expected an object at B.
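
The decision logic of this last step can be written down as a small sketch. It assumes looking times measured in seconds for each outcome, reads longer looking as surprise, and uses a hypothetical `min_diff` threshold as a stand-in for a proper statistical test; the function name and the labels it returns are illustrative only.

```python
from statistics import mean

def interpret_looking(times_object_at_b, times_object_at_c, min_diff=1.0):
    """Map mean looking times (seconds) to the account they would favour.

    Longer looking is read as surprise (violation of expectation).
    min_diff is a hypothetical threshold, not a substitute for a real test.
    """
    diff = mean(times_object_at_b) - mean(times_object_at_c)
    if diff > min_diff:
        # Surprised to find the ducky at B: the infant expected it at C.
        return "physical expectation wins"
    if diff < -min_diff:
        # Surprised to find the ducky at C: the infant expected it at B.
        return "social cue wins"
    return "no clear preference"
```

For example, `interpret_looking([10, 12], [5, 6])` falls in the first branch, since infants looked longer when the ducky turned up where the experimenter had gazed.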